MASAKO YANO

Published on Jun 14, 2022

HENNGE, a company of self-proclaimed “technology enthusiasts”, adopts new technologies on a daily basis. In May 2022, leveraging the latest AI service, HENNGE produced a video presentation of the company’s financial results, featuring avatars of our CEO and Executive Vice Presidents.

We invited Ms. Aoe from the Business Planning & Analysis Division, who is in charge of investor relations (“IR”), to talk about why and how this “world’s first” avatar financial results video was created.

Hello! Yes, that’s right!

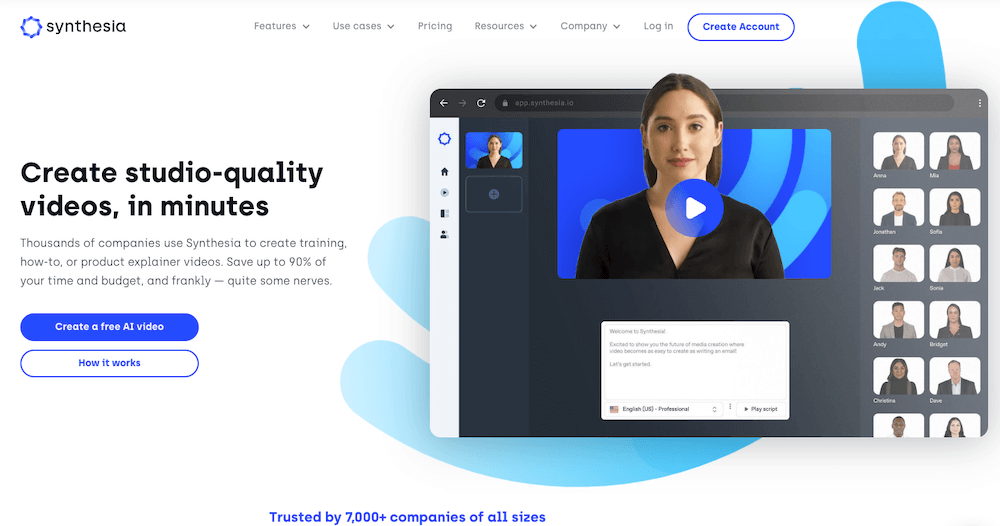

We used a video platform service called “Synthesia STUDIO”, which leverages AI (artificial intelligence), to create the video.

Both avatars blinked, and their facial and body movements were so realistic. Nothing looked strange compared to the real Ogura-san and Amano-san.

Real Image of Ogura-san Utilized to Create His Avatar

Thank you very much. We were glad to receive so many positive reactions to the video, both inside and outside the company.

Where did you get the idea for this avatar video?

There were two reasons. The first one was that we simply wanted to try out the latest tool. Mr. Amano found an article about Synthesia STUDIO, and we thought it would be interesting to create our own avatars. So, first, we tried it out within the IR team. We were so impressed with the quality that we decided to deploy it officially.

Isn’t that exactly the idea behind HENNGE’s values? We are willing to try out new technologies, which are just like unripe fruits, in order to realize the “Liberation of Technology”. HENNGE members seem to be really good at following the latest IT trends and proactively leveraging them.

That is so true. According to Synthesia in the U.K., the provider of Synthesia STUDIO, HENNGE was the first in the world to adopt this technology for IR.

First in the world?! Isn’t that amazing! It is so HENNGE to pursue new challenges without being afraid of making mistakes.

And what was the second reason?

The second reason was that we wanted to streamline the video production process. We make a financial results video every quarter, but since Ogura-san and Amano-san had to keep looking at the camera while talking about the financial results in both Japanese and English, it was much more difficult than I had imagined. If they made a mistake, we had to reshoot that part of the video, and the audio and lighting usually did not match even after the takes were edited together. Even when a session went well, multiple people had to check that the facial expressions were natural, that there were no mistakes, and so on.

I did not know it was that tough!

I can see that with Synthesia STUDIO they were able to focus on presenting without worrying about the camera, and the avatars’ mouth movements matched the audio. There was no need for retakes. So how did you create their avatars in the first place?

We rented a studio and took three videos of Ogura-san and Amano-san speaking in front of a green background. Then we sent the data to Synthesia, and after about 10 business days, we received the avatars.

That was fast! What did they have to say in the videos sent to Synthesia?

It can be anything in English or Japanese, as long as the speaker is comfortable and relaxed while talking about it. It can be a company overview, or a Japanese fairy tale, like Momotaro!

I see. Then the avatars and the recorded voice are combined to complete the video explaining the financial results?

Yes. The Japanese version of the video was created that way. As for the English version, the voice was cloned as well!

Oh, really?! I did not notice, since both Ogura-san’s and Amano-san’s pronunciation sounded so real. If you had not mentioned that their voices were cloned and synthesized, nobody would have known!

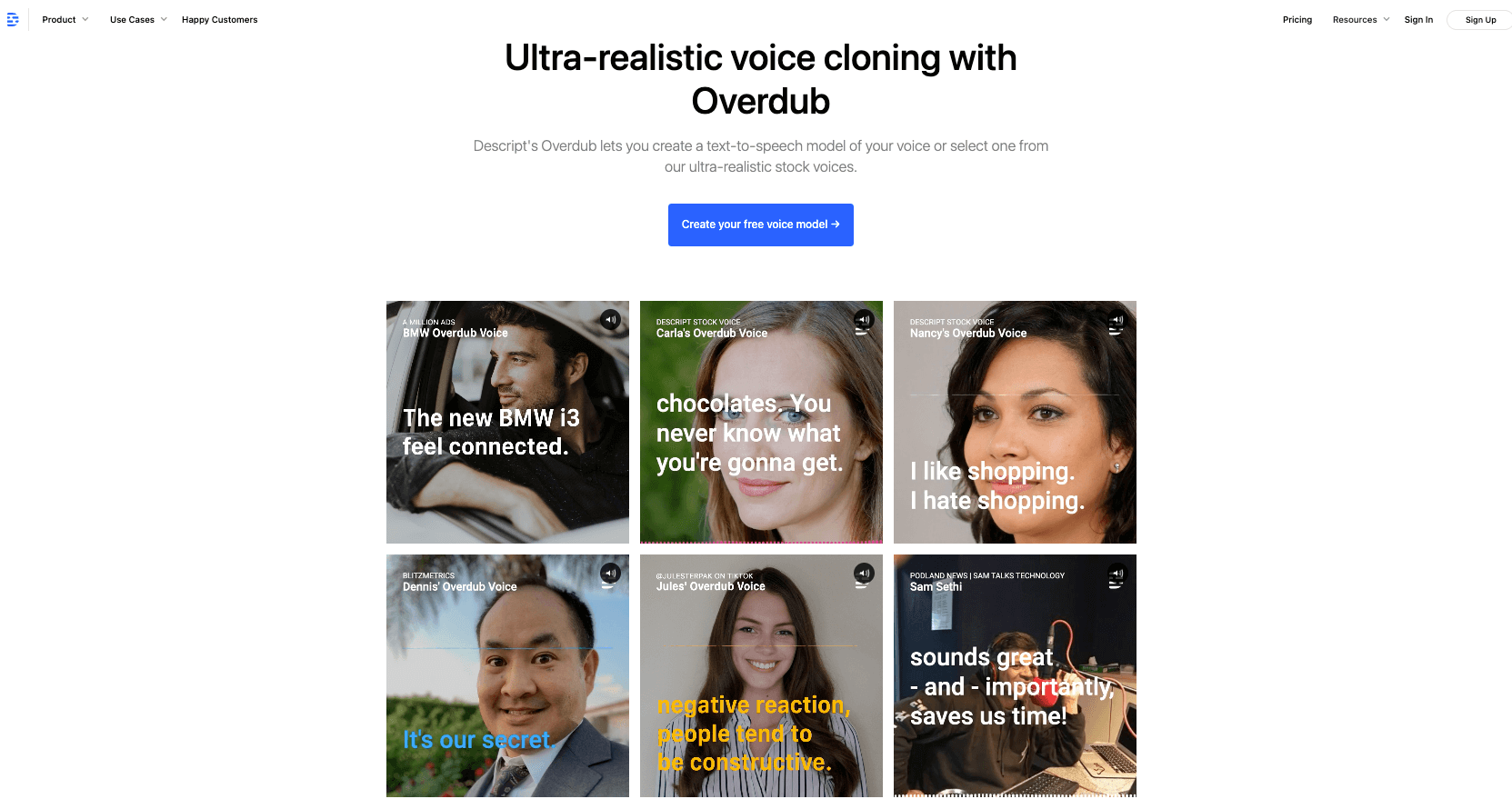

Thank you so much! For their voices, we used a text-to-speech service called Overdub, provided by Descript in the U.S. Once we submitted recordings of Ogura-san and Amano-san speaking English, the system picked up their voices and could read out the input text. This service did not support Japanese, so we used it only for the English version of the video this time.

Once such data is created, it can be reused for other projects, which would be very useful. Were there any points to pay attention to when using avatars and synthesized voices for our IR activities?

Avatars and voice synthesizers are really great technology, but they should not be misused. Therefore, the data must be managed securely. We cannot share the details, but we do have ways to distinguish the avatars from real Ogura-san and Amano-san themselves.

Indeed, we definitely need to consider the risk of misuse.

Also, we made sure that the important message would not be lost in the process of using avatars in our public IR video.

Our expectation is that this video will be one way to symbolize the HENNGE values that I mentioned at the beginning, and that people will become more open to the possibility that one day it will be normal for everyone to have their own avatars.

This video certainly presented one of the unknown possibilities of IT. Were there any challenges in using avatars and cloned voices?

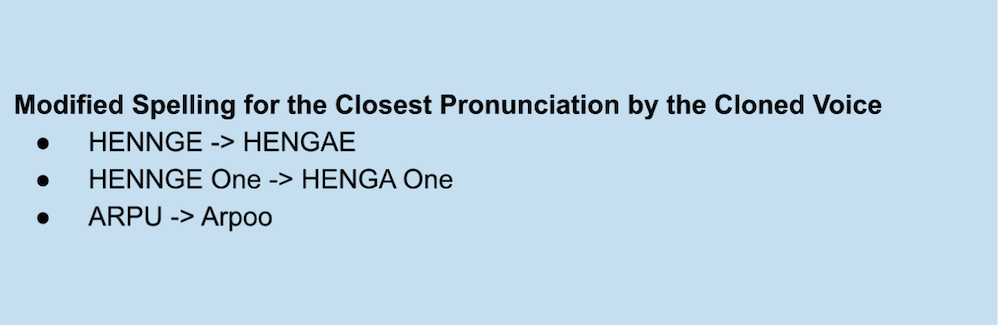

The video with avatars was quite easy to make, even for someone with little video editing experience like me. On the other hand, adjusting the pronunciation of the cloned voices was quite difficult. For example, our company name, “HENNGE”, was pronounced “Henj” by Overdub, so we had to change the spelling in the text to something like “HENGAY” or “HENGAI”.

I never expected that our company name itself would be a challenge. So which spelling worked best?

It was not perfect, but “HENGAE” was the closest. Another example was “SaaS”, which was pronounced “Sais” by the cloned voice. I think that pronunciation will remain a challenge in the future.
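For readers who want to try the same respelling workaround, here is a minimal Python sketch of how it might be automated before a script is pasted into a text-to-speech tool. It is not how the IR team actually did it, and it does not call Overdub or any other service. The function name `respell_for_tts` and the table `RESPELLINGS` are hypothetical; “HENGAE” is the spelling mentioned above, while the “SaaS” respelling is only an assumed placeholder.

```python
import re

# Hypothetical respelling table: written form -> phonetic spelling for the TTS input.
# "HENGAE" comes from the interview; the "SaaS" entry is an assumed placeholder.
RESPELLINGS = {
    "HENNGE": "HENGAE",
    "SaaS": "sass",
}


def respell_for_tts(script: str, respellings: dict[str, str] = RESPELLINGS) -> str:
    """Replace whole-word occurrences of each term with its phonetic respelling."""
    if not respellings:
        return script
    # Try longer terms first so a short term never shadows a longer one.
    terms = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b")
    return pattern.sub(lambda m: respellings[m.group(1)], script)


if __name__ == "__main__":
    print(respell_for_tts("HENNGE provides SaaS products."))
```

Keeping the respellings in one table means the on-screen script can keep the correct spelling, and only the copy given to the voice tool is altered.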

Industry-specific vocabulary and Japanese pronunciation must be tricky. Despite these challenges, are you going to continue creating the financial results video in the same manner?

We will see how our investors react. So far there has been no negative response, so we would like to continue leveraging avatars and cloned voices for a while.

I heard that our Sales Division has started to utilize avatar videos for their events as well!

I am glad that the method is being adopted. We would like to continue making changes, that is, “HENNGE”, both inside and outside the company by leveraging new technologies!

Thank you so much for your time!

Hello!

HENNGE’s financial results video released in May 2022 was amazing! Was it really the avatars of our CEO Ogura-san and Executive Vice President Amano-san in the video?